Advancing AI at the IoT Edge

Nov 15, 2023

By Deepak Mital

In a highly connected world, there is a need for more intelligent and secure computation performed locally, preferably on the very devices that capture data, whether that data is raw or compressed video, images, or voice. End markets continue to expect compute costs to trend down at a time when computation demands are increasing, as is evident from recently popularized AI paradigms such as large language models (LLMs). Beyond the well-understood benefits of compute at the edge – such as lower latency, increased security, and more efficient use of available bandwidth – AI can help consumers and enterprises realize benefits ranging from hyper-personalization and enhanced privacy to lower overall service costs, driven by optimized access to cloud services.

System-level solutions that make optimized edge AI implementations possible must address audio, voice, vision, imaging, video, time series, and now language processing, all locally. Synaptics solutions are designed with AI as a core tenet, as IoT markets such as smart home automation, appliances, signage, wearables, and process control evolve to integrate edge inferencing. Adaptive AI frameworks form a foundational pillar of the Synaptics Astra™ platform and take a holistic approach to enabling system developers.

Let us explore the critical elements of a well-designed AI-native edge solution.

Machine Learning Models and Development Tools

While silicon resources at the device edge are at a premium, typical model sizes are increasing, necessitating purpose-built versions that run on resource-constrained silicon. Frameworks and methodologies such as Neural Architecture Search (NAS) carry the promise of generating right-sized models for target hardware while meeting system service-level agreements. NAS builds neural networks through searches constrained by the target hardware, so that the resulting models meet both latency and accuracy goals.
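To make the idea of a hardware-constrained search concrete, here is a minimal sketch in Python. It is not Synaptics tooling or a production NAS method: the search space, the `estimate_latency_ms` and `estimate_accuracy` proxies, and the brute-force strategy are all illustrative assumptions. Real NAS flows measure or predict latency on the actual target and use far smarter search strategies.

```python
from itertools import product

# Hypothetical search space: layer count and channel width of a small network.
SEARCH_SPACE = {"depth": [2, 4, 6, 8], "width": [16, 32, 64, 128]}


def estimate_latency_ms(arch):
    """Toy latency proxy (assumption): cost grows with depth * width."""
    return 0.01 * arch["depth"] * arch["width"]


def estimate_accuracy(arch):
    """Toy accuracy proxy (assumption): more capacity helps, with diminishing returns."""
    capacity = arch["depth"] * arch["width"]
    return capacity / (capacity + 100.0)


def constrained_search(latency_budget_ms):
    """Return the highest-scoring architecture that fits the latency budget, or None."""
    best_score, best_arch = None, None
    for depth, width in product(SEARCH_SPACE["depth"], SEARCH_SPACE["width"]):
        arch = {"depth": depth, "width": width}
        if estimate_latency_ms(arch) > latency_budget_ms:
            continue  # violates the hardware constraint, so discard it
        score = estimate_accuracy(arch)
        if best_score is None or score > best_score:
            best_score, best_arch = score, arch
    return best_arch


if __name__ == "__main__":
    print(constrained_search(latency_budget_ms=3.0))
```

The key design point is that the latency budget acts as a hard filter before accuracy is compared, which is what distinguishes a hardware-aware search from one that optimizes accuracy alone.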

Jumpstarting the edge AI journey relies on the availability of reference models that use industry-standard frameworks. Subsequent phases require retraining, optimizing, and often building custom versions. It is essential that AI platforms offer sufficient flexibility to transform raw environment data into optimized models, as well as to deploy existing models onto edge silicon with minimal effort.

Deep expertise in vision, audio, imaging, video, and low-power technologies puts Synaptics in a leading position to deliver the tools to incorporate AI into essential IoT functions. Areas of active development across vision, audio, and time series data focus on segmentation, pose estimation, object detection, active noise reduction, keyword identification, background noise removal, fraud detection, and predictive maintenance.

Software for Secure Model Execution

The runtime software needs to address multiple facets of edge inferencing. AI models are the backbone of the applications and are often kept proprietary by industry players to build and sustain competitive advantages. They are often developed independently of the application and called into service as needed, so they require special handling to run securely. Runtime software from Synaptics has security built in: the model is encrypted and deployed via secure authentication.

Heterogeneous AI-Native Silicon

As ML models evolve, new functions and operators get added. Often, these new functions are more efficiently run on certain classes of hardware, such as NPUs, GPUs, standard cores, or DSPs. It is critical that these models run efficiently without the developer having to manually specify underlying accelerator configurations. Complicating the solution is the need to run multiple models stitched together that make up the entire application. Data passing and memory allocation across these domains make application development even more challenging.
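The mapping problem described above can be sketched as a small operator-to-backend assignment. This is an illustrative assumption, not the Synaptics runtime: the backend names, the capability table `BACKEND_OPS`, the preference order, and the operator names are all hypothetical. The sketch also counts how often consecutive operators land on different backends, since each such handoff implies data passing and memory allocation across domains.

```python
# Hypothetical capability table: which operators each backend can run.
# None means "supports everything" (the general-purpose fallback).
BACKEND_OPS = {
    "npu": {"conv2d", "matmul", "relu"},
    "dsp": {"fft", "fir_filter"},
    "gpu": {"conv2d", "matmul", "softmax", "resize"},
    "cpu": None,
}

# Assumed preference order, most efficient first.
PREFERENCE = ["npu", "dsp", "gpu", "cpu"]


def assign_backends(ops):
    """Assign each operator to the first preferred backend that supports it."""
    plan = []
    for op in ops:
        for backend in PREFERENCE:
            supported = BACKEND_OPS[backend]
            if supported is None or op in supported:
                plan.append((op, backend))
                break
    return plan


def count_handoffs(plan):
    """Count transitions between different backends along the operator sequence."""
    return sum(1 for prev, cur in zip(plan, plan[1:]) if prev[1] != cur[1])


if __name__ == "__main__":
    plan = assign_backends(["fft", "conv2d", "relu", "softmax", "custom_nms"])
    for op, backend in plan:
        print(f"{op:12s} -> {backend}")
    print("handoffs:", count_handoffs(plan))
```

A greedy per-operator choice like this can produce many handoffs; a real scheduler would likely weigh transfer cost against per-operator speed, which is part of why doing this by hand is burdensome for developers.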

Application runtime software from Synaptics, shown below, allows developers to overcome the complexities mentioned above. Let’s take a closer look.

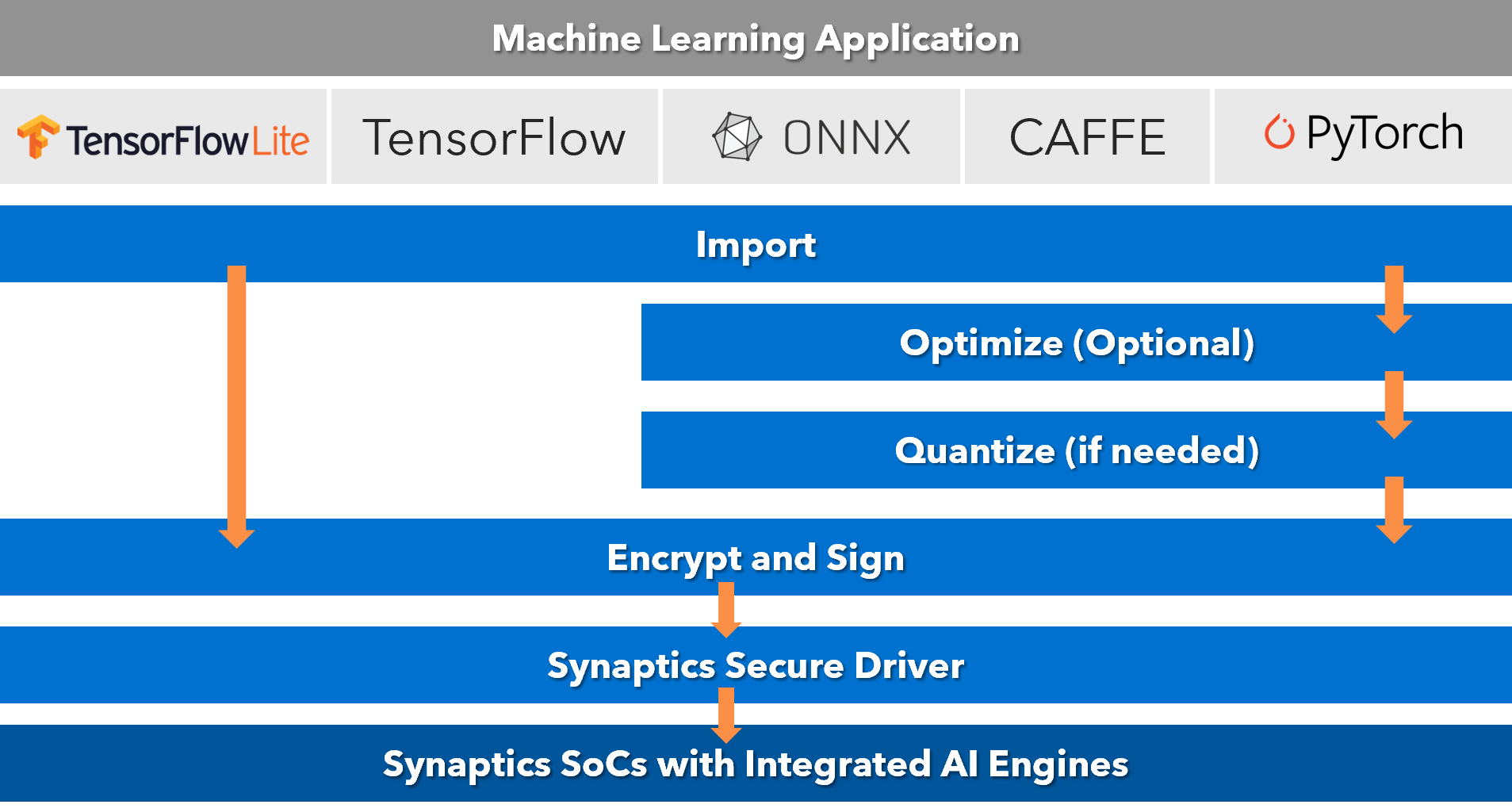

Synaptics AI software accepts commonly used input frameworks such as TensorFlow, TFLite, TFLM, ONNX, Caffe, and PyTorch. Developers can choose to optimize the network across neural network layers and, if the input network is not already quantized, quantize it to the data size supported by the silicon. The resulting network is encrypted and stored in memory. At runtime, the driver decrypts the network using securely stored keys. Synaptics delivers a full-featured, cross-platform toolkit to support the end-to-end edge AI pipeline, with the singular goal of making AI integration easy for the IoT.
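As a rough illustration of the quantization step mentioned above, the following Python sketch applies affine quantization to 8-bit signed integers. The choice of int8, the per-tensor scale, and the function names `quantize_int8` and `dequantize` are assumptions made for this example; the article does not specify the scheme or data sizes the Synaptics toolkit uses.

```python
def quantize_int8(values):
    """Map floats to int8 with one scale and zero point for the whole tensor."""
    # Extend the range to include 0.0 so that zero is exactly representable.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0
    if scale == 0.0:
        scale = 1.0  # all-zero tensor: any scale works
    zero_point = round(-128 - lo / scale)
    quantized = [
        max(-128, min(127, round(v / scale) + zero_point)) for v in values
    ]
    return quantized, scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Recover approximate float values from the int8 representation."""
    return [(q - zero_point) * scale for q in quantized]


if __name__ == "__main__":
    weights = [-1.0, 0.0, 0.5, 1.0]
    q, scale, zp = quantize_int8(weights)
    print("int8:", q)
    print("restored:", dequantize(q, scale, zp))
```

The restored values differ from the originals by a small rounding error on the order of `scale`, which is the accuracy cost traded for a model that is roughly four times smaller than its 32-bit floating-point form.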

Summary

Edge AI requires intelligent runtime software along with tools for model building. Along with providing the silicon to implement next-generation edge computing applications, Synaptics is committed to providing the requisite software framework to enable complex applications in IoT markets. Follow our developments and contact Synaptics sales to learn more this January at CES 2024.
